Overview

Just like an artificial flower isn’t a real flower, artificial intelligence isn’t real intelligence. Then what is AI? To develop a deeper understanding of AI, especially large language models (LLMs), I’ve been reading extensively, including news articles, research papers, thought leadership blogs, and overhyped videos, as well as a few books. I’ve also completed several online courses.

Unfortunately, much of the content online is not helpful. Too many sources make big claims, overhype the potential, and recycle the same talking points to get views or attention.

Ironically, it was the generic AI tools that helped me understand AI better than most other resources. Unlike books or videos, they are not one-way communication. I can ask questions and get explanations in simple language. I usually ask them to explain complex ideas as if I were a 10-year-old, and I follow up with as many questions as I need. The best part is, they never get tired or impatient. They just keep explaining, like some of the best teachers I have had in my life: patient, clear, and genuinely helpful.

Another source of knowledge in my learning journey is Andrej Karpathy. He was the Director of AI at Tesla and a founding member of OpenAI. His content stands out because it’s grounded. No hype, no ego. Just clear thinking, shared from first principles. That’s the approach we believe in at Contify too.

On June 17, 2025, Andrej gave a talk at AI Startup School in San Francisco titled “Software Is Changing (Again).”

In this article, I’ve tried to adapt some of the key ideas from that talk to a domain I work in every day: market and competitive intelligence platforms. I’ll explore what this technology actually is, how it’s reshaping the way we work, and what we should realistically expect from it.

Mental Models to Understand LLMs

Before we start using this new technology for serious business, we need to pause and ask: What exactly are we working with? Without a clear understanding, we risk misusing it, underutilizing it, or expecting too much from it.

To make sense of something as complex as large language models, I’ve found that simple analogies work best. So let’s break it down using analogies and develop a few clear mental models.

LLMs Are Like Electricity

In 2017, Andrew Ng said that “AI is the new electricity.” He predicted that just as electricity transformed nearly every industry a century ago, AI would do the same in the years to come.

At the time, it felt like a bold metaphor. Today, it’s starting to feel literal, especially when you see how LLMs are being used and consumed across industries.

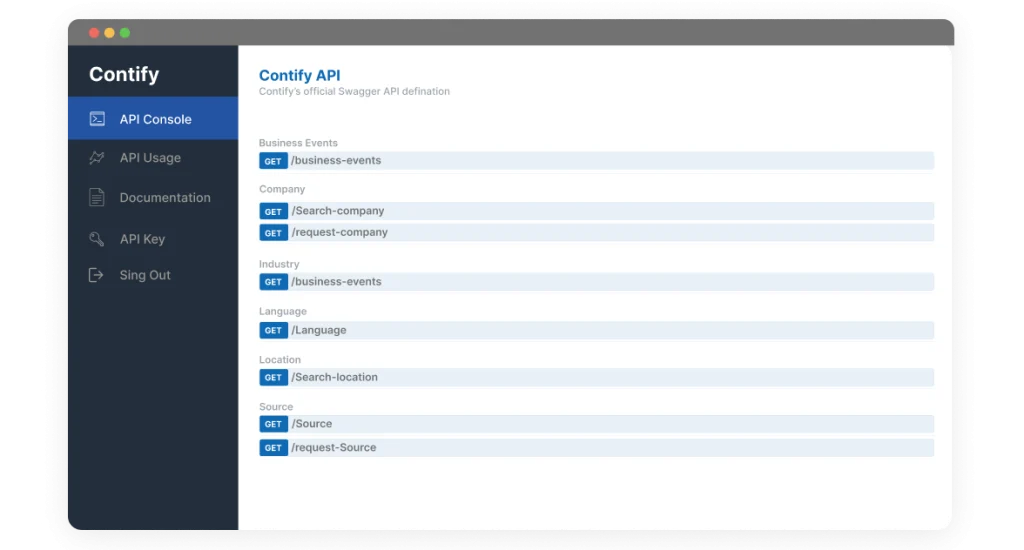

Companies like OpenAI, Anthropic, and Google are investing heavily in training these models, similar to building a power plant. They then offer access through APIs, metered in tokens (roughly, words or pieces of words). And we pay for those tokens (typically priced per million tokens) just like we pay for electricity (per kilowatt-hour).
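To make the metering concrete, here is a minimal Python sketch of the arithmetic. The prices and token counts are made-up placeholders, not any provider's actual rates, and the split into separate input and output prices is an assumption based on how many providers bill.

```python
def api_cost_usd(input_tokens: int, output_tokens: int,
                 input_price_per_million: float,
                 output_price_per_million: float) -> float:
    """Cost of a workload when tokens are billed per million."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_million
    output_cost = (output_tokens / 1_000_000) * output_price_per_million
    return input_cost + output_cost

# Hypothetical prices: $2 per million input tokens, $8 per million output tokens.
cost = api_cost_usd(input_tokens=500_000, output_tokens=250_000,
                    input_price_per_million=2.0,
                    output_price_per_million=8.0)
print(f"${cost:.2f}")
```

Like a utility bill, the total scales with consumption: doubling the tokens doubles the cost.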

Just like electricity, API users expect predictable performance, that is, reliability, low latency, and high uptime, so that they can build useful applications. These aren’t just “nice to have” expectations; they’re baseline requirements for applications built on these new technologies.

When ChatGPT went down recently, it wasn’t just a technical outage. It was an intelligence blackout. Work stalled. Dashboards went blank. Teams got stuck. It was an important reminder that we’re building applications on a new kind of infrastructure, and we need to treat it with the same seriousness as electricity.

There is one key difference. Unlike hardware for electricity, LLMs don’t compete for physical space. So, we can have multiple options and swap them instantly. For example, at Contify, we use Llama, Gemini, and OpenAI models, along with several embedding models, across different components of the platform, selecting the most suitable model for each based on requirements, performance, and cost.
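One way to picture this kind of swapping is a small routing table that maps each component to a model. The sketch below is illustrative only: the component names, provider labels, and model identifiers are all hypothetical and do not describe Contify's actual configuration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelChoice:
    provider: str  # hypothetical provider label
    model: str     # hypothetical model identifier

# Hypothetical routing table: which model handles which component.
ROUTES = {
    "tagging": ModelChoice("open-weights", "small-model-a"),
    "summarization": ModelChoice("hosted-api", "large-model-b"),
    "search": ModelChoice("hosted-api", "embedding-model-c"),
}
DEFAULT = ModelChoice("hosted-api", "general-model-d")

def pick_model(component: str) -> ModelChoice:
    """Look up the model for a component, falling back to a default."""
    return ROUTES.get(component, DEFAULT)

# Swapping a model is a one-line configuration change; callers are untouched.
ROUTES["tagging"] = ModelChoice("hosted-api", "small-model-e")
print(pick_model("tagging").model)
```

Because callers only ever ask `pick_model` for a component, a model can be replaced when cost or performance changes without touching the rest of the application.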

LLMs Are Like Time-Shared Mainframes

Another way to think about today’s LLMs is to imagine we’ve gone back to the 1960s. LLMs are like centralized mainframes, not personal computers.

These are not just new tools; they’re an entirely new class of computing. And just like the mainframes of the past, they’re powerful, centralized, and expensive to run.

Most of us don’t load LLMs on our servers. We interact with them over the internet. We submit our queries, wait for our turn, and get back a response, just as users did 60 years ago with “time-shared” mainframe computers.

The LLM runs in a data center. We’re thin clients on the edge. Running LLMs is expensive; therefore, it makes sense to centralize them, similar to mainframe computers, and distribute access via APIs.

Until LLMs become far more efficient, running the largest models on personal devices, such as laptops, will remain out of reach.

That said, I’m very bullish about the potential of small language models (SLMs), especially when they’re fine-tuned and optimized for your specific use cases. Several recent studies have shown that smaller models, when trained on focused tasks, can outperform larger models on accuracy, efficiency, and responsiveness. They’re also easier to deploy on your secured servers, making them a strong candidate for enterprise AI applications where control and customization matter most.

LLMs Are Like People Spirits

LLMs can talk like humans. They remember more facts than any of us. They can hold a conversation across domains and write with clarity and structure. But don’t confuse that for real intelligence.

They are people-pleasers by design. Trained to be helpful and agreeable. That’s why they say “yes” more than they should, and when they don’t know something, they’re more likely to make up an answer instead of admitting “I don’t know.”

We’ve all seen examples where they fabricate citations or URLs that look perfectly real, inventing non-existent papers, court cases, or product specs when asked for “sources”. They’re optimized to produce plausible text, not to validate truth.

What we see is a simulation of how humans use language. LLMs are trained on massive amounts of internet text. They identify and memorize patterns in how humans speak and write, and store those patterns as model weights. They generate text, but they don’t understand it.

They don’t understand the physical world the way we do. They don’t build mental models like the ones we are building right now, and they have no physical intuition. Their core strength lies in predicting the next word based on the patterns they have memorized. That prediction engine is powerful, and it is what makes their responses sound intelligent.
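A toy example can make "predicting the next word from patterns" less abstract. The Python sketch below counts which word follows which in a tiny made-up corpus and always picks the most frequent follower. It is a deliberately crude stand-in: real LLMs use neural networks over sub-word tokens rather than lookup tables, but the generate-one-word-at-a-time loop is similar in spirit.

```python
from collections import Counter, defaultdict

# A tiny, made-up training text.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count which word follows which word in the training text.
following = defaultdict(Counter)
for current, nxt in zip(corpus, corpus[1:]):
    following[current][nxt] += 1

def predict_next(word: str) -> str:
    """Return the most frequent follower of `word` seen in the corpus."""
    return following[word].most_common(1)[0][0]

def generate(start: str, length: int) -> list[str]:
    """Extend `start` one predicted word at a time."""
    words = [start]
    for _ in range(length):
        words.append(predict_next(words[-1]))
    return words

print(" ".join(generate("the", 3)))
```

The output reads like plausible English, yet nothing in the program knows what a cat or a mat is. It only replays patterns it has counted.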

They have near-infinite memory but no real understanding. No awareness of what’s right or wrong. That’s why they can hallucinate, which is just a fancy word for “they make up things”. They might solve a complex math problem and still claim that 9.11 is greater than 9.9. And that makes us question even their correct answers.
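The 9.11 versus 9.9 case is a useful reminder that the same characters can mean different things in different contexts. The short Python sketch below shows only that ambiguity. It does not claim to explain what happens inside any particular model.

```python
from decimal import Decimal

a, b = "9.11", "9.9"

# Read as decimal numbers, 9.9 is the larger value.
as_numbers = Decimal(a) > Decimal(b)

# Read as dotted version numbers (major.minor), 9.11 comes after 9.9.
as_versions = tuple(map(int, a.split("."))) > tuple(map(int, b.split(".")))

print(as_numbers, as_versions)
```

A person resolves this from context without noticing. A system that only matches patterns in text has no built-in way to know which reading is intended.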

Another reason LLMs feel more like spirits than real people is their limited working memory, called a context window. Even advanced models with large context windows can only hold a conversation for a limited time, and only within a single session. Once the session ends, everything resets.
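Here is a minimal Python sketch of what a fixed context window implies. It assumes one simple strategy, keeping only the most recent messages that fit a token budget, and it uses a word count as a crude stand-in for a real tokenizer. Actual tools handle overflow in different ways.

```python
def count_tokens(text: str) -> int:
    # Crude stand-in: real tokenizers split text into sub-word pieces.
    return len(text.split())

def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages that fit inside the token budget."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

history = [
    "Our main competitor is Acme",
    "Track their pricing changes",
    "What changed this week",
]
visible = fit_to_window(history, max_tokens=8)
print(visible)
```

With a budget of eight "tokens", the oldest message, the one naming the competitor, no longer fits. Anything outside the window is simply invisible to the model.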

It’s not like talking to a colleague who remembers your company, your past conversations, and gets better over time. It’s more like the main character in Memento, who cannot form new memories and has to start from scratch again and again.

LLMs don’t have real long-term memory. What they “know” is frozen in their model weights. What they “remember” lives only in the current session. They don’t learn from experience the way humans do.

LLMs Are Like Operating Systems

If electricity explains the infrastructure, and people spirits explain the behavior, then this mental model helps us understand how the entire ecosystem is evolving.

Think of LLMs as a new kind of operating system (OS).

They’re not just utilities like electricity that flow through wires. They are becoming complex software environments. In this model, the LLM is the CPU. It is the core compute layer with memory (the context window), processing (token generation), and orchestration.

Your input prompt lives in the working memory (context window), where the LLM reads it and generates output, token by token, just like CPU cycles.

Closed models such as GPT and Claude are the Windows and macOS equivalents. Open models like Llama and Mistral are like Linux.

And to actually get work done on those operating systems, you use business applications like CRMs or ERPs, purpose-built tools with user interfaces, workflows, and connectors that integrate with the rest of your systems.

Can you interact directly with the OS? Sure, you can open a terminal and type commands. But that’s not how most people work. You wouldn’t run your CRM from a command line.

Generic AI tools such as ChatGPT or Claude are like those terminal windows: a text-based interface to powerful systems, but with no purpose-built user interface, no workflows, no shared memory across sessions, and no knowledge of your organization.

Trying to run business functions such as market and competitive intelligence directly through raw prompts is like managing enterprise software from a command line. It’s technically possible, but practically exhausting.

Enterprises Need Purpose-Built Apps, Not Generic LLM Tools

LLMs are powerful, but using ChatGPT or Claude directly through text-based windows is ineffective. To use them well, you need applications with user interfaces, structured workflows, and working memory.

This is even more important in functions like market and competitive intelligence, which have complex workflows. You need an application that provides structured workflows, persistent working memory, and integrations with other internal systems.

To succeed in the fast-moving, competitive landscape, you need more than prompts. You need systems that remember your context, connect with your existing tools, and understand the specifics of your business—your strategic priorities, your competitors, and what matters most to your teams.

Let’s understand how these ideas come together in a real business application with the example of Contify’s AI, Athena.

Athena is an application built on top of LLMs, designed for market and competitive intelligence workflows. It doesn’t replace the LLMs. It adds what generic tools miss: orchestration, organizational memory, user interfaces, and human-in-the-loop workflows.

Here’s how Athena transforms raw LLM capabilities into a business-ready application.

1. Built with Organizational Context

Generic LLM tools don’t know what’s important to your business. They don’t know your business priorities, your competitors, what relevance means for your stakeholders, or what innovation means for your organization.

In competitive intelligence, relevance is always measured from the context of your business objectives, not by generic definitions.

Take innovation, for example. It could mean new product features. Or a pricing shift. Or a new channel strategy. Or a technology change. The definition isn’t universal. It’s always contextual.

To use LLMs effectively, you need to provide this context. Expecting users to provide this context in every prompt, every time they interact with generic AI tools, is not realistic.

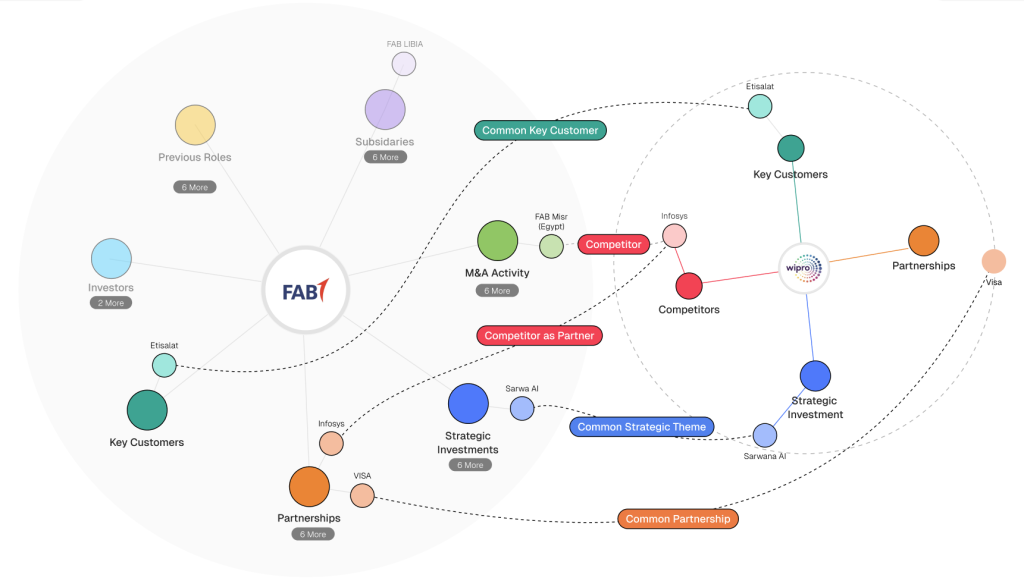

Athena solves this by storing your organization’s context in Knowledge Graphs and applying it automatically. It uses your custom taxonomies, strategic focus areas, industry segments, competitor list, and internal knowledge base when using LLMs to generate insights.

This means every insight Athena delivers is not just grounded in facts; it also carries the context of your organization.
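The general idea of applying stored context automatically can be sketched in a few lines of Python. This is a simplified illustration, not Athena's implementation: it uses a flat record instead of a knowledge graph, and every name in it (`OrgContext`, `build_prompt`, the example companies) is hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class OrgContext:
    company: str
    competitors: list[str] = field(default_factory=list)
    priorities: list[str] = field(default_factory=list)
    innovation_means: str = ""

def build_prompt(ctx: OrgContext, question: str) -> str:
    """Prepend stored organizational context to the user's question."""
    return "\n".join([
        f"Company: {ctx.company}",
        f"Competitors: {', '.join(ctx.competitors)}",
        f"Strategic priorities: {', '.join(ctx.priorities)}",
        f"For this company, innovation means: {ctx.innovation_means}",
        f"Question: {question}",
    ])

# Context is stored once, then reused for every question.
ctx = OrgContext(
    company="ExampleCo",
    competitors=["RivalOne", "RivalTwo"],
    priorities=["pricing", "new channels"],
    innovation_means="pricing shifts and new channel strategies",
)
prompt = build_prompt(ctx, "What innovation happened this week?")
print(prompt)
```

The user types one short question. The application supplies the rest, so the model sees what "innovation" means for this organization without anyone retyping it.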

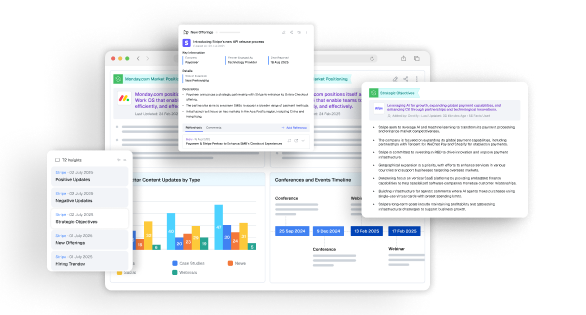

2. A User Interface Built for Market and Competitive Intelligence (M&CI) Workflows

Using ChatGPT like a terminal window—text in, text out, blank slate every time—might work for a quick prototype. But it’s not how people use business software where the goal is efficient workflows and informed decisions.

In real-world intelligence workflows, no one wants to scroll through long paragraphs trying to spot what has changed in their competitive landscape. They need to see timelines of strategic activities, side-by-side comparisons, benchmarks, competitor profiles, and live dashboards that surface trends, early signals, innovations, and disruptions, all without having to manually prompt each piece of intelligence separately.

A proper user interface isn’t just about convenience. It’s critical for speed, comprehension, and verification. That’s what is missing in most generic AI tools. The burden is on you to enter what matters and connect the dots across sessions.

Reading text is a slow and cognitively demanding process. Visualizing intelligence in a structured manner unlocks different parts of our brain and taps into real human intelligence. You see patterns more clearly, move faster, and catch early warning signals before your competitors. Unless they are also using Contify, which makes it a level playing field. 🙂

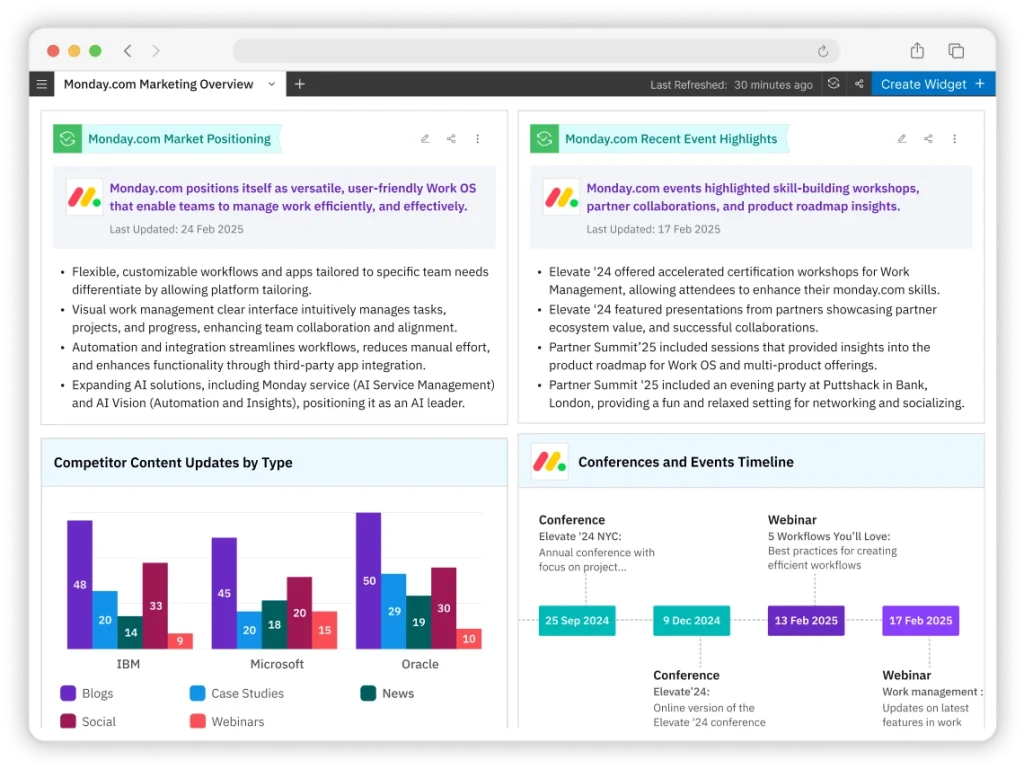

Contify provides that interface for market and competitive intelligence automation. It’s an orchestration of various components optimized for intelligence creators and users, including role-based dashboards, fact cards tied to sources, company timelines, event streams, and insight views that stay grounded in reliable facts and your organizational context.

You are not entering the same question each morning. The system already tracks what you care about and shows you what changed. You stay focused on analyzing new developments and their implications for your organization.

3. Orchestration, Not Just Prompts

Orchestration simply means you’re not starting from zero every single time. The system carries context forward, links each step to the next, and runs the entire process as a single unified flow instead of a series of disconnected prompts.

Athena doesn’t just send one prompt to a model and hand you a paragraph to read. It coordinates the entire intelligence pipeline.

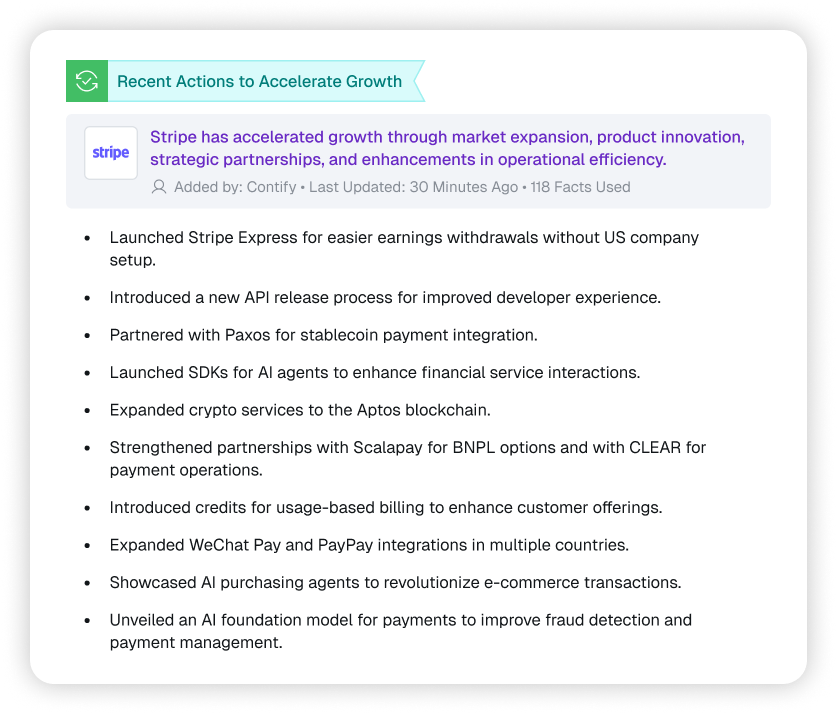

When a new update comes in, it first checks whether it’s relevant to your business. If it is, it figures out what kind of update it is—partnership, product launch, funding, hiring, regulatory, etc. If it’s a material business event, it extracts the structured data and creates facts. Those facts then roll up into insights using pre-configured insight templates that already have your organization’s context, taxonomy, competitors, and priorities. From there, related dashboards, competitor profiles, and timelines refresh automatically.
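The flow described above can be sketched as a chain of small steps in Python. The sketch uses keyword rules as stand-ins for what would be LLM calls in a real system, and the competitor names, event keywords, and function names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Fact:
    company: str
    event_type: str
    text: str

# Hypothetical configuration standing in for stored organizational context.
COMPETITORS = {"RivalOne", "RivalTwo"}
EVENT_KEYWORDS = {
    "partnership": ["partners with", "partnership"],
    "product launch": ["launches", "unveils"],
    "funding": ["raises", "funding round"],
}

def find_company(update: str) -> Optional[str]:
    """Step 1: relevance. Keep only updates naming a tracked competitor."""
    return next((c for c in sorted(COMPETITORS) if c in update), None)

def classify(update: str) -> Optional[str]:
    """Step 2: decide what kind of update this is."""
    lowered = update.lower()
    for event_type, keywords in EVENT_KEYWORDS.items():
        if any(k in lowered for k in keywords):
            return event_type
    return None

def process(update: str) -> Optional[Fact]:
    """Steps 1 to 3: relevance check, classification, fact creation."""
    company = find_company(update)
    if company is None:
        return None
    event_type = classify(update)
    if event_type is None:
        return None
    return Fact(company, event_type, update)

def insight(facts: list[Fact]) -> str:
    """Step 4: roll facts up using a fixed template."""
    return "; ".join(f"{fact.company}: {fact.event_type}" for fact in facts)

updates = [
    "RivalOne launches a new analytics product",
    "Local bakery wins award",
    "RivalTwo raises $50M in a funding round",
]
facts = [fact for u in updates if (fact := process(u)) is not None]
summary = insight(facts)
print(summary)
```

Each step hands its result to the next, so irrelevant updates drop out early and only structured facts reach the insight stage.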

Under the hood, there is a chain of carefully tuned prompts, handpicked LLMs, and agentic workflows, which are optimized for translation, tagging, deduplication, disambiguation, fact extraction, and insight generation.

To achieve this, we’ve tested many LLMs to see which ones are best for accuracy and speed, and deployed different models to handle different steps of the pipeline. There’s also a monitoring layer that continuously tracks the performance of the live system and watches for drift in the answers, hallucinations, or odd spikes so that issues can be flagged early.
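As a rough illustration of what "watching for odd spikes" can mean, the Python sketch below flags a metric value that sits far outside its recent history. The metric, the numbers, and the threshold are hypothetical, and a production monitoring layer would track far more than one number.

```python
from statistics import mean, stdev

def is_spike(history: list[float], latest: float,
             z_threshold: float = 3.0) -> bool:
    """Flag `latest` if it sits far outside the historical spread."""
    if len(history) < 2:
        return False  # not enough data to judge
    spread = stdev(history)
    if spread == 0:
        return latest != history[0]
    return abs(latest - mean(history)) / spread > z_threshold

# Hypothetical daily metric: share of answers without a supporting source.
baseline = [0.02, 0.03, 0.02, 0.04, 0.03]
print(is_spike(baseline, 0.03), is_spike(baseline, 0.20))
```

A value in line with the baseline passes quietly, while a sudden jump is flagged for a person to investigate.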

The result: instead of typing and retyping prompts, saving fragments, copying outputs, and stitching them together yourself, you get a system that automates the repetitive manual work. You stay focused on the analysis: why it matters and what you recommend. The machine handles the repetitive steps. You support strategic decisions.

4. Democratizing AI Across the Organization

An intelligent organization doesn’t come from just a few people writing clever prompts. If AI is going to make a real difference, it has to work consistently for everyone, not just power users who know how to get the most out of ChatGPT. It should be part of everyday workflows, across teams, without requiring anyone to learn how to be a prompt engineer or how to evaluate LLMs.

That’s what business applications, such as Athena, do for organizations.

Instead of making each user figure out what to ask and how to ask it, Athena gives you ready-made templates for common intelligence tasks. These templates aren’t generic; they’re designed to be customized to your business, so the insights stay relevant, accurate, and aligned with your organization’s priorities.

Sales, product, strategy, and marketing teams just get the intelligence they need, in their dashboards, in their preferred format, and within the tools they already use. And those dashboards keep updating automatically. Nobody needs to remember to ask, “What has changed today?” The system tells them.

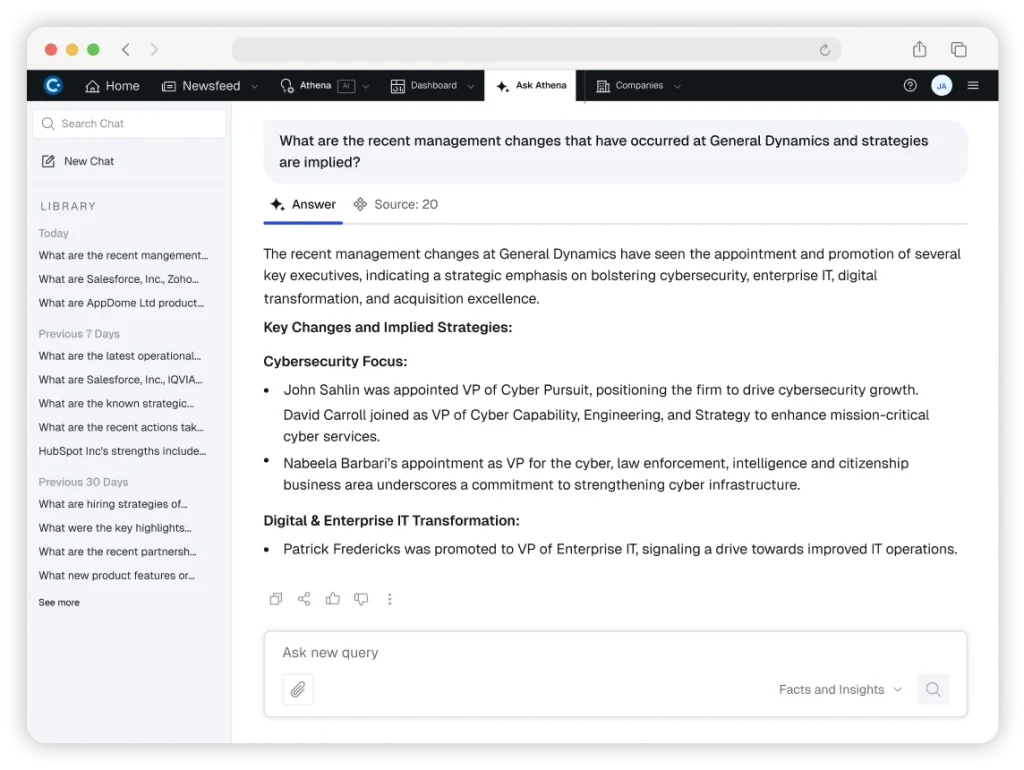

And, if someone does want to go deeper or needs to know something specific, they can. The chat interface of Ask Athena lets anyone type a question in plain English and get a fact-based, contextual response.

5. Built for Human-in-the-Loop AI

LLMs can sound confident, even when they don’t have all the facts to answer the question. The answer might read well, but there’s no easy way to know where it came from. Did it use facts from a reliable source? Or did it just make something up? That uncertainty is unacceptable when the stakes are high, such as when analyzing the competitive landscape to inform strategic decisions.

When it comes to intelligence, fast answers are not enough. You need answers that are verified so that you can trust them.

That’s why Athena is designed with the human-in-the-loop approach at its core.

Athena is built on the principle of traceability. This is important for verifying the responses provided by LLMs. You can see the exact sources LLMs used, whether they are news articles, regulatory filings, or internal CRM notes, and what pieces of information (facts) from those sources were used. Those facts are right there, clearly mapped, so you know exactly what informed the insight.
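Traceability is, at its core, a data-modeling decision: every insight keeps links to its facts, and every fact keeps a link to its source. The Python sketch below shows one possible shape for that. The class and field names are hypothetical and are not Athena's actual schema.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Source:
    kind: str   # e.g. "news article", "regulatory filing", "CRM note"
    title: str

@dataclass(frozen=True)
class Fact:
    statement: str
    source: Source

@dataclass
class Insight:
    summary: str
    facts: list[Fact]

    def sources(self) -> list[Source]:
        """Every distinct source behind this insight, in first-seen order."""
        return list(dict.fromkeys(fact.source for fact in self.facts))

    def is_traceable(self) -> bool:
        """An insight with no supporting facts cannot be verified."""
        return len(self.facts) > 0

filing = Source("regulatory filing", "Example annual report")
note = Source("CRM note", "Example account note")
insight = Insight(
    summary="RivalOne is moving upmarket",
    facts=[
        Fact("RivalOne raised list prices", filing),
        Fact("RivalOne added an enterprise tier", filing),
        Fact("A customer mentioned RivalOne's enterprise pitch", note),
    ],
)
print([source.kind for source in insight.sources()])
```

With this structure, a reviewer can walk from any insight back to the statements and documents that support it.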

And if something doesn’t look right, you’re in control. You can edit a fact, override the insight, or add your own context. Athena learns from that input and improves over time.

To implement human-in-the-loop, especially for strategic functions like competitive intelligence, you’ll need to ask:

- What should the LLM see, and what should it generate?

- What decisions still need human judgment?

- Where can the system operate independently?

- And where should it stay under your supervision?

Athena doesn’t replace the analyst; it takes the repetitive work off your plate, so you can focus on the work that really needs your judgment.

Final Thoughts

Back in 2013, Andrej Karpathy rode in a self-driving car through the streets of Palo Alto. The car drove perfectly. No interventions. Just a smooth, 30-minute ride. It felt like the future had arrived.

But that was more than a decade ago. We’re still working on fully autonomous cars. Not because the demo was fake, but because the final stretch—where reliability matters—is always more complicated than it looks.

Generative AI is following a similar path.

LLMs are powerful tools, but they are not intelligent in the way humans are. They don’t understand context the way we do. Human intelligence involves continuous learning, adaptation, and integration of new information through experience.

LLMs don’t learn from experience. They generate responses through statistical patterns, rather than genuine comprehension of the situation or reasoning based on an understanding of language. That’s why you need someone in the loop to verify, adjust, and apply judgment when it counts.

But do not dismiss it as just another hype cycle. The picture is sometimes confusing, especially with the media noise and the investment community trying to justify their investments. But make no mistake, this technology is real.

That said, the most realistic and practical expectation from AI right now is partial autonomy. We shouldn’t aim to take humans out of the loop. Instead, we should aim to make humans far more effective. AI should lead to less “rote work,” allowing humans to use real human intelligence and produce more valuable work.

Successful B2B software will evolve into these partially autonomous systems. Systems where you can dial the autonomy up or down based on how much you trust the output and how high the stakes are.
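An autonomy dial can be pictured as a routing rule that weighs how confident the system is against how much is at stake. The Python sketch below is one possible formulation: the thresholds, the `autonomy` parameter, and the idea of a single numeric confidence score are all assumptions made for illustration.

```python
from enum import Enum

class Stakes(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3

# Hypothetical confidence needed before the system may act without review.
# A value above 1.0 means "always send to a human".
REQUIRED_CONFIDENCE = {
    Stakes.LOW: 0.70,
    Stakes.MEDIUM: 0.90,
    Stakes.HIGH: 1.01,
}

def route(confidence: float, stakes: Stakes, autonomy: float = 1.0) -> str:
    """Return "auto" or "human review".

    `autonomy` is the dial: 1.0 uses the thresholds as given, lower values
    raise them, and 0.0 sends everything to a human.
    """
    if autonomy <= 0:
        return "human review"
    threshold = REQUIRED_CONFIDENCE[stakes] / autonomy
    return "auto" if confidence >= threshold else "human review"

print(route(0.95, Stakes.LOW))
print(route(0.95, Stakes.HIGH))
print(route(0.95, Stakes.MEDIUM, autonomy=0.9))
```

Turning the dial down does not switch the system off. It just moves more decisions back to a person.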

When designed right, AI becomes a force multiplier. It allows teams to move faster by making informed decisions. And that’s the direction we’re building toward with Athena. If you’d like to see how Contify’s Athena can help your market and competitive intelligence program, you can request a demo.